Agentic Systems | HOTL design patterns…

2/3/2025 | 3 min read

The architect in me was born during the era of mainframes, raised amidst the era of microservices, and now finds itself living alongside agents. The evolution of architecture has always mirrored the challenges of its time. Today, in the era of agentic systems, we find ourselves needing to borrow from — yet adapt — the design patterns honed during the microservices boom. Some patterns carry over beautifully, others need tweaking, and entirely new ones emerge to address the unique challenges posed by agents.

This reflection makes me wonder: Is it time to write a book on “Design Patterns for the Agentic World”? Perhaps. Until then, I’ll start small, with this series of articles exploring design patterns in agentic systems. This article focuses on Human-on-the-Loop (HOTL) patterns and their importance in this new architectural paradigm. In Part 1, I’ll define the patterns; in Part 2, I’ll delve into their implementation using frameworks such as LangGraph and CrewAI.

Human-on-the-Loop (HOTL): Why does it matter in agentic systems?

Agents powered by language models are inherently probabilistic. Every decision, every action, every sequence they generate is based on probability. While this makes them powerful, it also makes them unpredictable. They can deviate from intended objectives — and when they do, human intervention becomes crucial to correct their trajectory.

This is where HOTL steps in. It provides a safety net, ensuring that agents stay aligned with goals and objectives while maintaining accountability. In agentic systems, HOTL is not a luxury — it’s a necessity.

HOTL patterns:

1. Zero-Turn Interaction

In this pattern, agents are designed to require human approval before making any decisions or taking actions. The system pauses for human review and resumes based on the feedback provided.

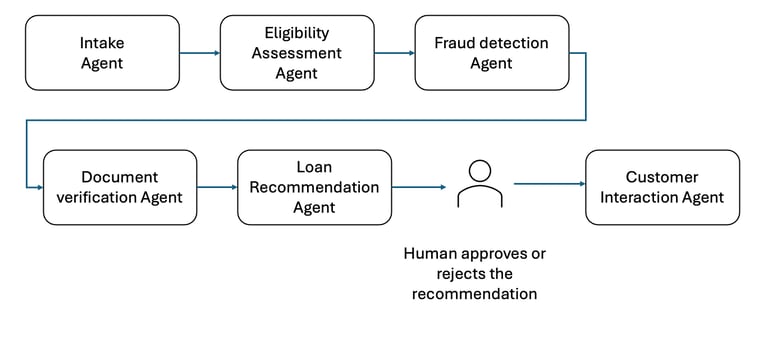

Example: Consider a multi-agent system tasked with processing loan applications. The agents collaborate to gather the information required to determine whether the loan can be approved. But before approving or rejecting an application, the system must present its assessment to a human loan officer for validation, ensuring compliance with regulatory standards.
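As a rough illustration of the approval gate in this example, here is a minimal Python sketch. It assumes the agents' collaborative work has already produced a recommendation. `Assessment`, `Decision`, and `finalize_with_approval` are hypothetical names, not part of LangGraph, CrewAI, or any other framework, and the officer is modeled as a plain callable standing in for whatever review UI or queue a real system would use. In practice the pause may last hours, so a production system would more likely persist the pending assessment and resume when the officer responds rather than block.

```python
from dataclasses import dataclass, field
from typing import Callable, Tuple

@dataclass
class Assessment:
    """Hypothetical output of the collaborating agents for one loan application."""
    application_id: str
    recommendation: str              # "approve" or "reject", as proposed by the agents
    rationale: str
    evidence: dict = field(default_factory=dict)

@dataclass
class Decision:
    """The outcome that is actually recorded, always signed off by a human."""
    application_id: str
    outcome: str
    reviewed_by: str
    overridden: bool                 # True when the officer disagreed with the agents
    comment: str = ""

# Hypothetical reviewer interface: sees the assessment, returns (outcome, comment).
Reviewer = Callable[[Assessment], Tuple[str, str]]

def finalize_with_approval(assessment: Assessment, reviewer: Reviewer, officer_id: str) -> Decision:
    """Zero-turn gate: the agents' recommendation is never applied directly.
    The run waits for the officer, and the officer's outcome is what gets recorded."""
    outcome, comment = reviewer(assessment)
    if outcome not in ("approve", "reject"):
        raise ValueError(f"unexpected outcome from reviewer: {outcome!r}")
    return Decision(
        application_id=assessment.application_id,
        outcome=outcome,
        reviewed_by=officer_id,
        overridden=outcome != assessment.recommendation,
        comment=comment,
    )

# Usage: the agents recommend approval, but the officer rejects the application.
draft = Assessment("APP-001", "approve", "Debt-to-income ratio within policy", {"dti": 0.28})
decision = finalize_with_approval(draft, lambda a: ("reject", "Missing proof of income"), "officer-42")
print(decision.outcome, decision.overridden)  # reject True
```

The `overridden` flag is what makes the officer's feedback easy to learn from later: it marks exactly the cases where the agents got it wrong.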

In this scenario, the officer’s feedback can be stored in long-term memory and reused in subsequent executions of the flow, so each run can improve on the last.
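One way to realize this is sketched below, under the assumption that a JSON file is an acceptable stand-in for a real long-term memory store (a database or vector store in practice). The idea is to log each review and feed the most recent corrections back into the next run. `FeedbackMemory` and the category labels are hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import List

class FeedbackMemory:
    """Hypothetical long-term memory for reviewer feedback, persisted as a JSON file
    so it survives across separate executions of the workflow."""

    def __init__(self, path: Path):
        self.path = path
        self.entries = json.loads(path.read_text()) if path.exists() else []

    def record(self, category: str, agent_outcome: str, human_outcome: str, comment: str) -> None:
        self.entries.append({"category": category, "agent": agent_outcome,
                             "human": human_outcome, "comment": comment})
        self.path.write_text(json.dumps(self.entries, indent=2))

    def guidance(self, category: str, limit: int = 3) -> List[str]:
        """Most recent human corrections for this category, e.g. to add to the agents' prompt."""
        corrections = [e["comment"] for e in self.entries
                       if e["category"] == category and e["agent"] != e["human"]]
        return corrections[-limit:]

# Usage: record two reviews, then reload the store as a later run would.
store = Path(tempfile.mkdtemp()) / "loan_feedback.json"
memory = FeedbackMemory(store)
memory.record("self-employed", "approve", "reject", "Require two years of tax returns")
memory.record("salaried", "approve", "approve", "")
print(FeedbackMemory(store).guidance("self-employed"))  # ['Require two years of tax returns']
```

Only overrides are fed back here, on the assumption that agreements carry little new signal. Whether to retrieve past feedback by category, by similarity to the current case, or both is a design choice.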

2. Single-Turn Interaction

Here, the agent seeks human input only when it encounters ambiguity. It pauses, asks for clarification, and uses the feedback to regenerate its output.

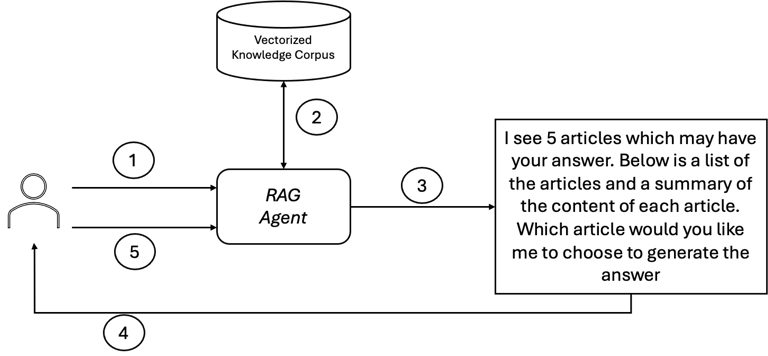
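A minimal Python sketch of this control flow follows. It assumes the agent can signal ambiguity by returning a clarifying question instead of an answer. `AgentOutput`, `run_single_turn`, and the toy agent are hypothetical and not drawn from any framework. The key property is that the human is consulted at most once per request.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class AgentOutput:
    answer: Optional[str] = None
    question: Optional[str] = None   # set instead of `answer` when the input is ambiguous

# Hypothetical agent interface: (task, clarification or None) -> AgentOutput
Agent = Callable[[str, Optional[str]], AgentOutput]

def run_single_turn(task: str, agent: Agent, ask_human: Callable[[str], str]) -> str:
    """Consult the human only if the agent flags ambiguity, and at most once."""
    first = agent(task, None)
    if first.question is None:
        return first.answer or ""
    clarification = ask_human(first.question)   # the single human turn
    second = agent(task, clarification)          # regenerate using the feedback
    if second.answer is None:
        raise RuntimeError("still ambiguous after one clarification; escalate instead of looping")
    return second.answer

# Usage with a toy agent that cannot tell which "Jaguar" is meant.
def toy_agent(task: str, clarification: Optional[str]) -> AgentOutput:
    if "Jaguar" in task and clarification is None:
        return AgentOutput(question="Do you mean the car brand or the animal?")
    return AgentOutput(answer=f"Answering about the {clarification or 'topic'}")

print(run_single_turn("Tell me about Jaguar", toy_agent, lambda q: "car brand"))
# Answering about the car brand
```

Raising when the second attempt is still ambiguous, rather than asking again, is deliberate: repeated asking would turn this into the multi-turn pattern.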

Example: An AI-powered customer service chatbot encounters a query that matches multiple knowledge base articles. Unsure of the best article, it consults a human agent to determine the most appropriate source for the answer, thereby improving the accuracy of the final response.

The Flow:

The user asks the chatbot (RAG agent) a question.

The chatbot searches the vectorized KB for relevant sources.

It identifies five closely matching sources but can’t determine the most appropriate one.

The chatbot asks the human for guidance, providing a summarized view of the articles.

The human selects the best source, enabling the chatbot to generate an accurate response.

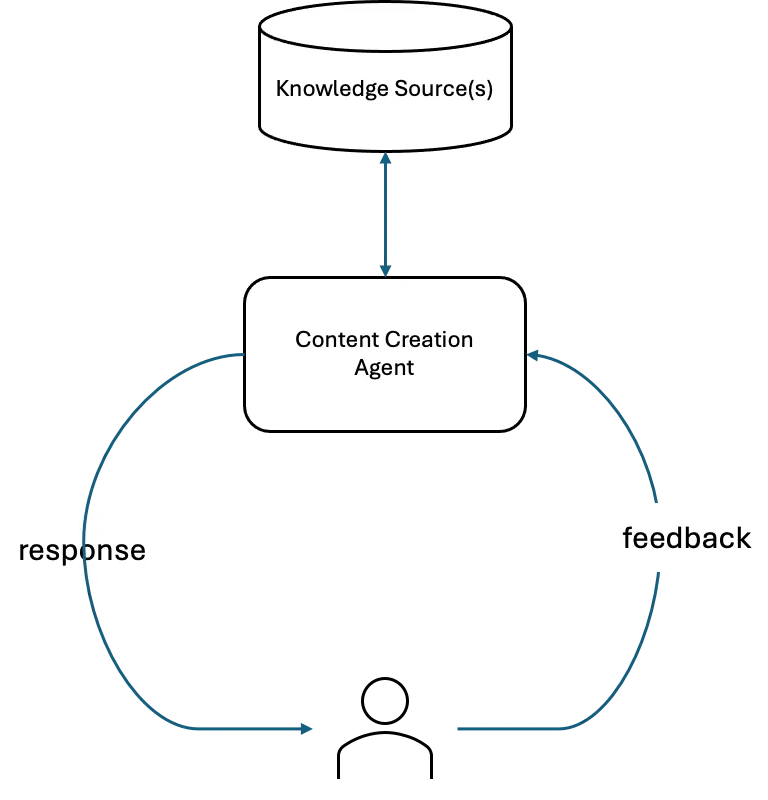

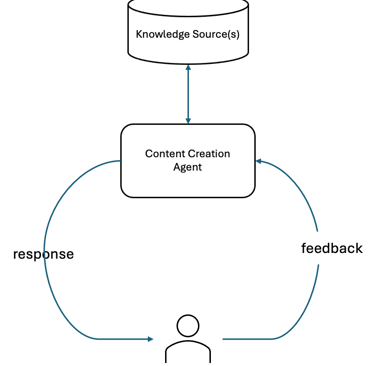
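The flow above can be sketched in Python, assuming the vector search has already returned scored hits. This sketch treats "closely matching" as "within a fixed score margin of the best hit," which is an assumption. The `Source` and `pick_source` names and the 0.05 margin are also hypothetical. Real systems might use a re-ranker or a calibrated threshold instead.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Source:
    title: str
    summary: str       # the summarized view shown to the human
    score: float       # similarity score from the vector search (higher is better)

def pick_source(hits: List[Source], choose: Callable[[List[Source]], int],
                margin: float = 0.05) -> Source:
    """Return the source to ground the answer on. If several hits score within
    `margin` of the top hit, retrieval is ambiguous and the human picks one."""
    if not hits:
        raise ValueError("no sources retrieved")
    ranked = sorted(hits, key=lambda s: s.score, reverse=True)
    contenders = [s for s in ranked if ranked[0].score - s.score <= margin]
    if len(contenders) == 1:
        return contenders[0]                  # clear winner: no human turn needed
    return contenders[choose(contenders)]     # single human turn over the summaries

def show_and_choose(options: List[Source]) -> int:
    """Stand-in for a review UI: print the summaries; here the human picks option 1."""
    for i, s in enumerate(options):
        print(f"[{i}] {s.title}: {s.summary}")
    return 1

hits = [
    Source("Refund policy (2023)", "Refunds within 30 days", 0.91),
    Source("Refund policy (2024)", "Refunds within 14 days", 0.90),
    Source("Shipping FAQ", "Delivery times by region", 0.62),
]
best = pick_source(hits, show_and_choose)
print("Grounding the answer on:", best.title)
```

The chosen source is then passed to the generator as context. The human only selects the source and does not write the answer.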

3. Multi-Turn Interaction

This pattern involves iterative collaboration between the agent and a human. The agent presents an initial output, the human provides feedback, and the agent refines its response based on that feedback. This loop continues until the desired outcome is achieved.

Example: In content creation, an AI tool drafts an article and shares it with a human editor. The editor provides feedback, and the agent revises the draft accordingly. This collaborative loop continues until the content meets the desired quality standards.
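The editing loop can be sketched as follows. It assumes the writer is an LLM call that receives the brief plus all feedback collected so far, and the editor is a human who either returns a revision note or accepts the draft (`None`). The function names and the `max_rounds` cap are hypothetical additions. The article does not prescribe a stopping rule beyond reaching the desired quality.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interfaces: the writer drafts from the brief plus all feedback so far;
# the editor returns a revision note, or None to accept the draft.
Writer = Callable[[str, List[str]], str]
Editor = Callable[[str], Optional[str]]

def refine_with_editor(brief: str, write: Writer, review: Editor,
                       max_rounds: int = 5) -> Tuple[str, int]:
    """Multi-turn HOTL loop: draft, collect human feedback, revise, repeat until accepted.
    `max_rounds` caps how much human time and model cost one piece can consume."""
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        draft = write(brief, feedback)
        note = review(draft)
        if note is None:
            return draft, round_no
        feedback.append(note)
    raise RuntimeError(f"no accepted draft after {max_rounds} rounds")

# Usage with toy stand-ins for the LLM writer and the human editor.
def toy_writer(brief: str, feedback: List[str]) -> str:
    return brief + "".join(f" [{note}]" for note in feedback)

def toy_editor(draft: str) -> Optional[str]:
    for required in ("add an example", "shorten intro"):
        if required not in draft:
            return required
    return None

final, rounds = refine_with_editor("HOTL article", toy_writer, toy_editor)
print(rounds, final)  # 3 HOTL article [add an example] [shorten intro]
```

Passing the accumulated feedback, not just the latest note, keeps earlier corrections from being undone in later revisions. That is one design choice among several.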

Conclusion

Each HOTL pattern strikes a different balance between latency and cost. Zero-turn adds a human review to every run, single-turn only to runs the agent finds ambiguous, and multi-turn to every round of refinement. The right pattern depends on the specific use case. As architects, it’s our job to assess these trade-offs and design systems that optimize for both efficiency and effectiveness. After all, the future of agentic systems is not just about what agents can do, but about how efficiently and cost-effectively they can run.
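To make the trade-off concrete, here is a back-of-the-envelope model in Python. Every number in `Costs` is a hypothetical placeholder, not a measurement. The model assumes that latency and cost are dominated by two things: agent generation passes and human review turns. Zero-turn pays for one human wait per run. Single-turn pays only on the fraction `p_ambiguous` of runs, which also regenerate once. Multi-turn pays on every one of `rounds` rounds.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass
class Costs:
    # Hypothetical placeholders: replace with measurements from your own system.
    agent_seconds: float = 20.0        # one agent generation pass
    human_wait_seconds: float = 600.0  # time for a human to respond to one request
    model_cost: float = 0.05           # cost units per generation pass
    human_cost: float = 2.0            # cost units per human review turn

def expected(generations: float, human_turns: float, c: Costs) -> Tuple[float, float]:
    """Expected (latency in seconds, cost) of one run."""
    latency = generations * c.agent_seconds + human_turns * c.human_wait_seconds
    cost = generations * c.model_cost + human_turns * c.human_cost
    return latency, cost

def compare(c: Costs, p_ambiguous: float = 0.2, rounds: float = 3.0) -> Dict[str, Tuple[float, float]]:
    return {
        # Zero-turn: one generation, and every run waits for one approval.
        "zero-turn": expected(1, 1, c),
        # Single-turn: only ambiguous runs (probability p) ask once and regenerate once.
        "single-turn": expected(1 + p_ambiguous, p_ambiguous, c),
        # Multi-turn: each round is one generation plus one human review.
        "multi-turn": expected(rounds, rounds, c),
    }

for name, (latency, cost) in compare(Costs()).items():
    print(f"{name:<12} latency ~{latency:5.0f} s   cost ~{cost:.2f}")
```

With these placeholder numbers, the human wait accounts for most of the latency in every pattern. This suggests that how often a pattern calls on a human, more than model speed, is what to weigh first.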